LLMs and Generative AI—buzzwords that seem to be everywhere these days, right? There's just no getting away from them.

In this blog, we’ll explore what LLMs are, how foundational LLMs are built, the opportunities they offer and the risks they pose.

What are LLMs?

LLMs are machine learning models trained on vast amounts of text data to predict the next word in a sentence. It might sound simple, but these models have proven incredibly powerful and effective across many tasks involving text and code. They’re the backbone of many AI applications you’ve probably encountered, from chatbots to code generation tools.

The heavy lifting behind foundational LLMs

Foundational LLMs are large, powerful machine learning models trained on vast amounts of text, serving as the base for a wide range of AI applications. Creating a foundational LLM is no small feat. It requires a huge amount of GPU time on large clusters, access to billions of training tokens, and some very specialized expertise. This isn’t something just anyone can do—it’s typically reserved for organizations with significant funding and resources.

That being said, LLMs are still widely available:

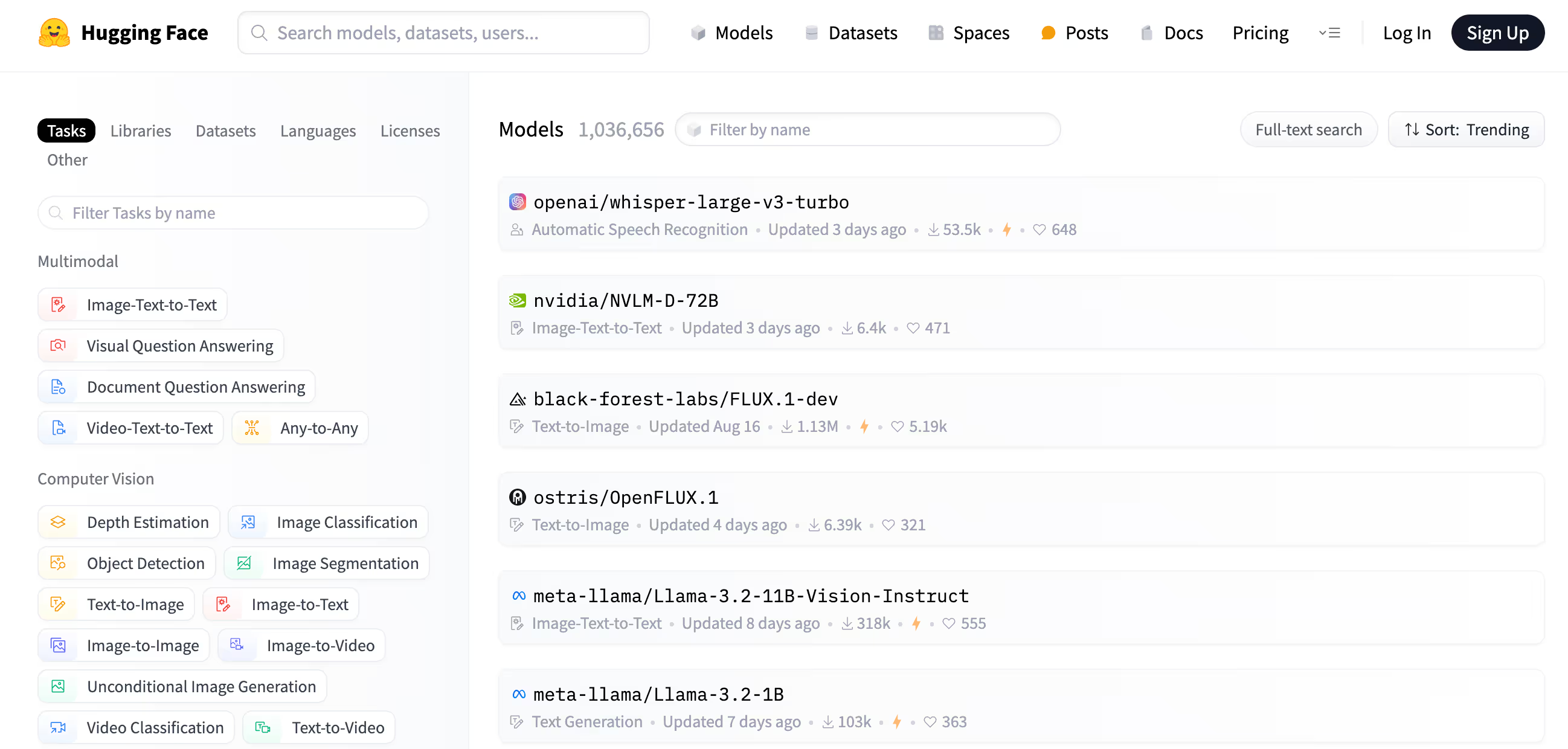

- Nearly 1,023,000 models are now available on Hugging Face.

- Around 220,000 datasets are accessible on the platform.

- Over 1 million model downloads have occurred recently.

Who’s building these models?

There’s a pretty clear divide when it comes to who builds these foundational LLMs. On one side, you have the commercial giants like OpenAI, Google, Microsoft, and Anthropic, who create these models at a high cost and deploy them through APIs or private clouds. The model weights are kept under wraps, not shared with the public.

On the other side, we have open-source LLMs. These models are built by organizations or individuals who then release them to the public, allowing anyone with the right hardware to run them. However, just because a model is open source doesn’t necessarily mean it’s free for commercial use. Licensing restrictions and the datasets used for training can complicate things.

What can you do with LLMs?

Once you have a foundational LLM, there’s a lot you can do to build on top of it. Here are a few options:

Fine Tuning: This involves continuing to train a foundational LLM with additional, more specific data to tailor its performance to a particular use case. For example, if you want a model to excel in legal document analysis, you'd fine-tune it with a dataset of legal texts.

Weight Quantization: This process reduces the size of an LLM by compressing its parameters (or "weights") without significantly impacting its performance. The smaller, quantized model is easier to deploy on devices with limited computational power, like mobile phones or edge devices.

Instruction Tuning: This technique involves training the model to better understand and follow specific types of instructions. For instance, you might teach the model to excel at generating responses to questions or following step-by-step procedures, making it more effective in tasks like virtual assistants or customer service bots.

Alignment Tuning: Here, the model is trained to avoid generating harmful or toxic outputs. This tuning is crucial for ensuring that the AI behaves ethically and safely, especially in applications where it interacts with users, such as chatbots or content generation tools.

Un-censoring: This involves reversing some of the alignment tuning to allow the model to generate content without certain restrictions. It’s a controversial approach and is typically used in research or very specific use cases where less filtered output is desired.

Reinforcement Learning from Human Feedback (RLHF): In this method, a model is fine-tuned based on preferences expressed by human users. For example, humans might rank the quality of different responses generated by the model, and this feedback is used to adjust the model to align more closely with human preferences, improving its relevance and usefulness.

Model Merging: This process combines the strengths of multiple models to create a new, improved model. By merging models, developers can create a hybrid that benefits from the unique capabilities or knowledge of each original model, resulting in better overall performance.

These processes are made easier by a vast ecosystem of open-source tools that have emerged to support LLM remixing and deployment. For example, platforms like Hugging Face have become the go-to place for developers to discover and share LLMs, offering everything from model hosting to deployment tools.

The risks of using LLMs

With the current explosion of interest in generative AI lots of enterprises experiment or deploy Large Language Models (LLMs). While there are multiple commercial LLM offerings there is a huge and very active ecosystem of OSS LLMs mostly housed in Hugging Face. These LLMs are in many ways just another dependency that brings operational and security risks in the organization that uses them. Users of LLMs need ways to gain visibility into these risks.

Operational Risk:

When it comes to evaluating and running open-source large language models (LLMs), several operational risks can arise:

- License Complications: LLM licensing can be tricky. The model's license may differ from the datasets it was trained on, and the outputs might have their own licensing issues.

- Unproven Models: Using models with questionable lineage or trained on low-reputation datasets is risky. These models might not perform well or could lack important transparency about their training process.

- Tuning and Alignment Issues: Some models may not be properly tuned or aligned, potentially generating toxic or harmful outputs. It’s hard to verify this from metadata alone, and standardized benchmarks are needed to evaluate this effectively.

- Intentionally Malicious Models: There’s always a risk that models could be trained to deliver offensive or incorrect results, either broadly or in specific cases.

- Performance Uncertainty: Unlike traditional open-source code, it's harder to measure if a model performs well until it’s tested on your specific use case. Test data and leaderboards can give some insight but often aren't enough to ensure reliable performance in real-world scenarios.

Security Risk:

When downloading machine learning models, there are several security risks to be aware of:

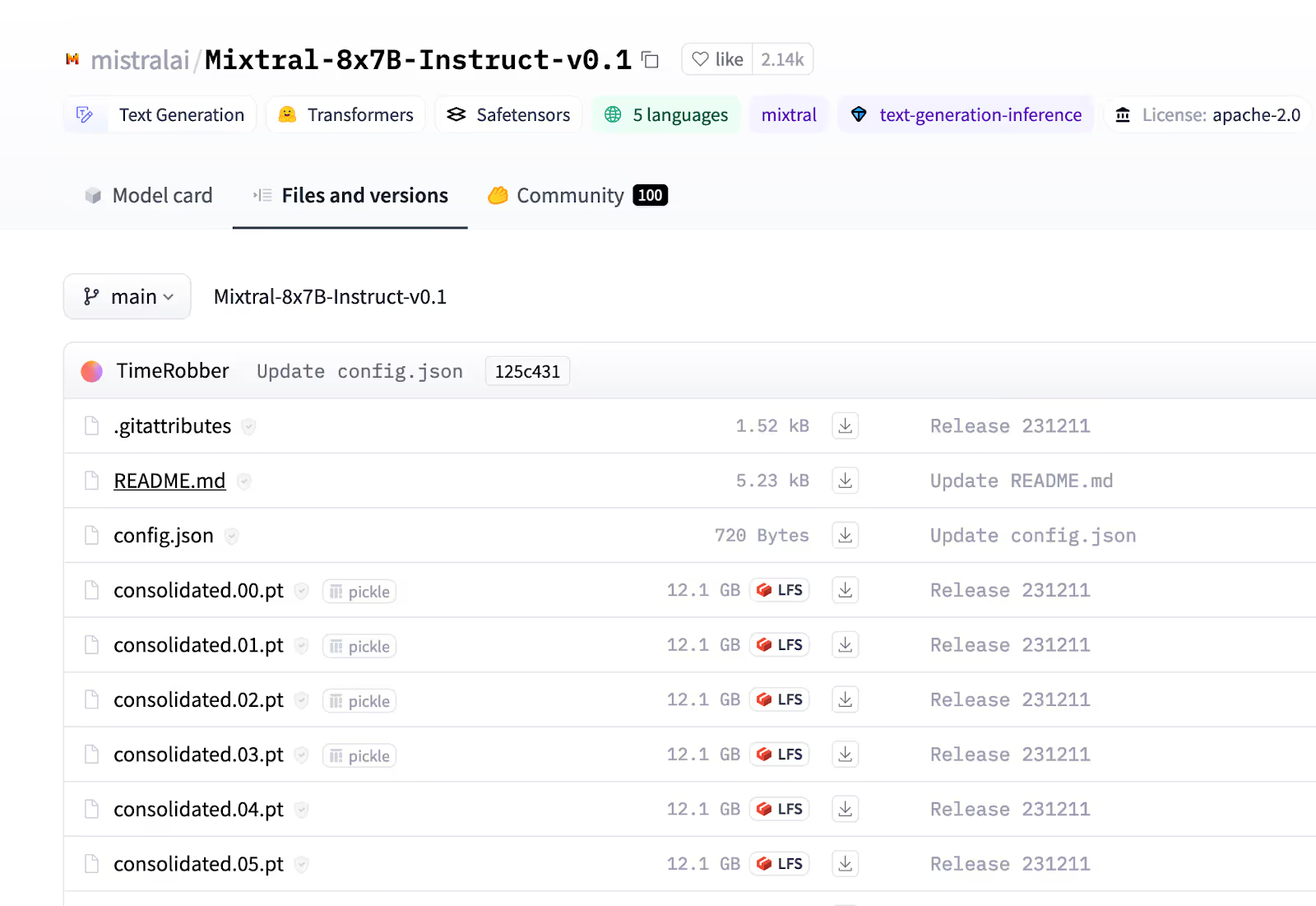

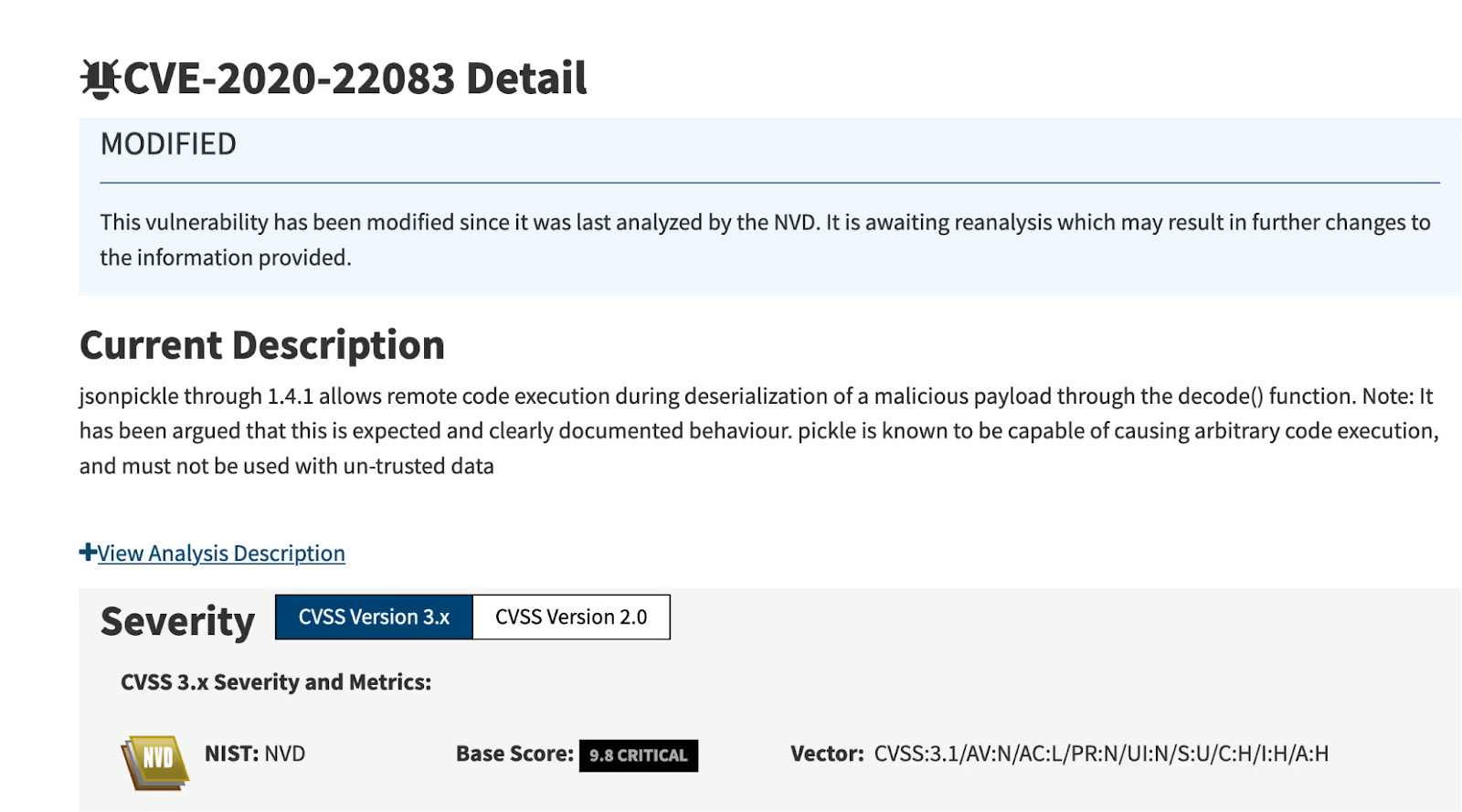

- Malicious Code in Models: Some models may contain malicious code, especially those packaged in the older pickle format. Newer formats like safetensors mitigate this risk, and platforms like Hugging Face scan for unsafe usage of pickle.

- Malicious or Vulnerable Dependencies: Models often require additional code, which could be compromised. This includes code snippets in readme files or scripts within the model repository that may introduce vulnerabilities or malicious dependencies.

- Links to Malicious Repositories: Models may include links to external repositories, which could be malicious or vulnerable. While users may not always use these repositories, if they do, there’s a risk of infection.

- Denial of Service (DoS) Attacks: Models can be intentionally malformed to crash systems, leading to a DoS attack.

- Embedded Malicious Binaries: Recently, methods have emerged where models are embedded inside binaries. Running these binaries can create a server, exposing users to direct attacks.

- Compromised Accounts and Models: HF account compromises can lead to malicious models being uploaded. Attackers can also hijack legitimate models, adding malicious content or replacing them with harmful versions.

- Private Model Leaks: There’s a risk of private models being leaked, though it's unclear how widespread this is.

- Supply Chain Attacks: Common tactics like typosquatting are also prevalent, where attackers upload models with similar names to popular ones, tricking users into downloading malicious versions.

Book a demo to understand how Endor Labs turns your vulnerability prioritization workflows dreams into reality or start a full-featured free trial that includes test projects and the ability to scan your own projects.

40+ AI Prompts for Secure Vibe Coding

What's next?

When you're ready to take the next step in securing your software supply chain, here are 3 ways Endor Labs can help: